Everything You Need To Know About F1-score


Introduction

The $F_1$ score is the harmonic mean of precision and recall. It therefore represents both precision and recall symmetrically in a single metric.

The highest possible value of the $F_1$ score is 1.0, indicating perfect precision and recall. The lowest possible value is 0, which occurs when either precision or recall is zero.

Definition

Harmonic Mean

In mathematics, the harmonic mean is one of several kinds of average. The harmonic mean $H$ of the positive real numbers $x_1, x_2, \ldots, x_n$ is defined as

\[H(x_1, x_2, \ldots, x_n) = \frac{n}{\frac{1}{x_1} + \frac{1}{x_2} + \cdots + \frac{1}{x_n}} = \frac{n}{\sum_{i=1}^{n}\frac{1}{x_i}}\]

Precision and Recall

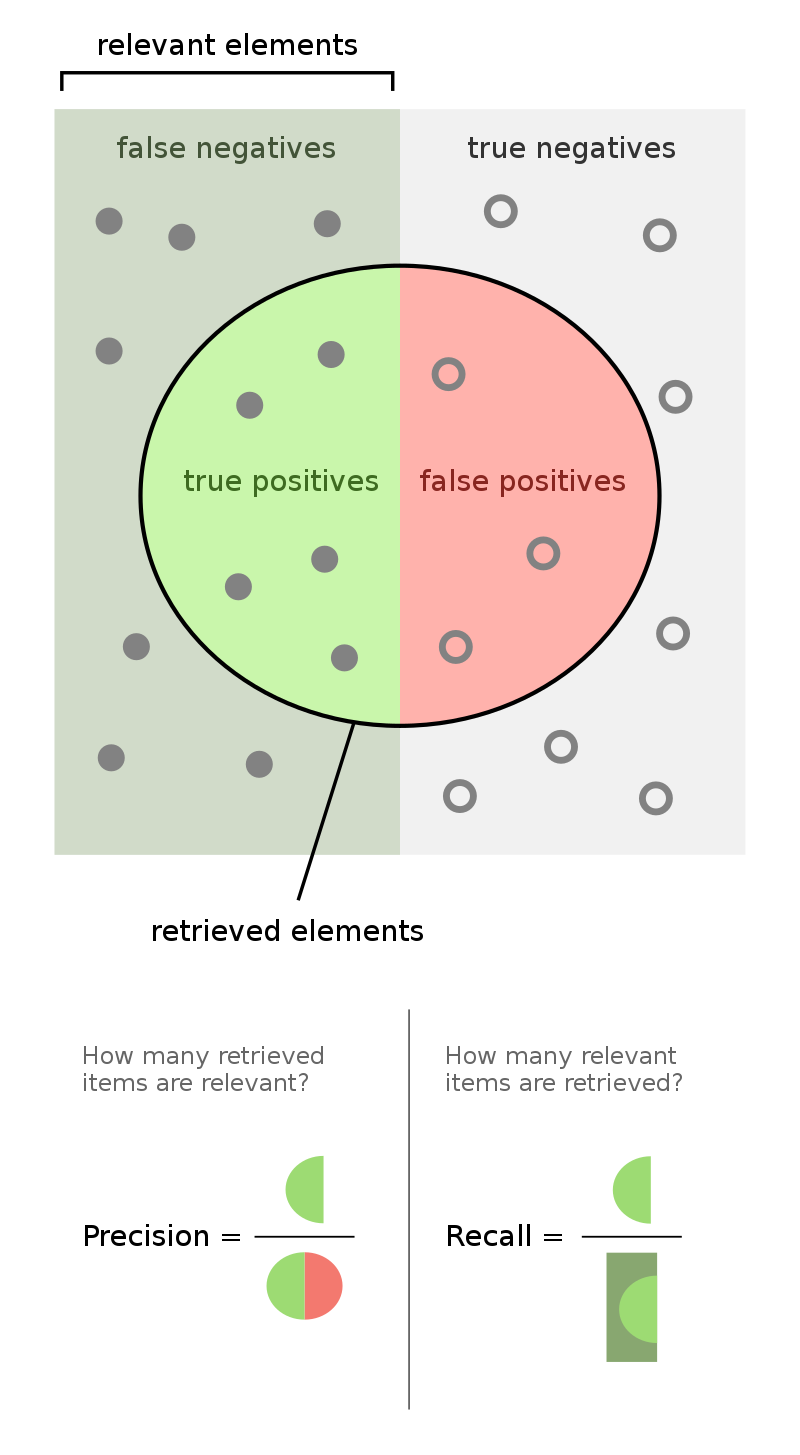

Precision ($P$) is defined as the number of true positives ($TP$) over the number of true positives plus the number of false positives ($FP$):

\[P = \frac{TP}{TP+FP}\]

Recall ($R$) is defined as the number of true positives ($TP$) over the number of true positives plus the number of false negatives ($FN$):

\[R = \frac{TP}{TP+FN}\]

The $F_1$ score is defined as the harmonic mean of precision and recall:

\[F_1 = \frac{2}{\frac{1}{P} + \frac{1}{R}} = 2 \times \frac{P \times R}{P+R}\]

The relationship between recall and precision can be summarized by the average precision ($AP$).

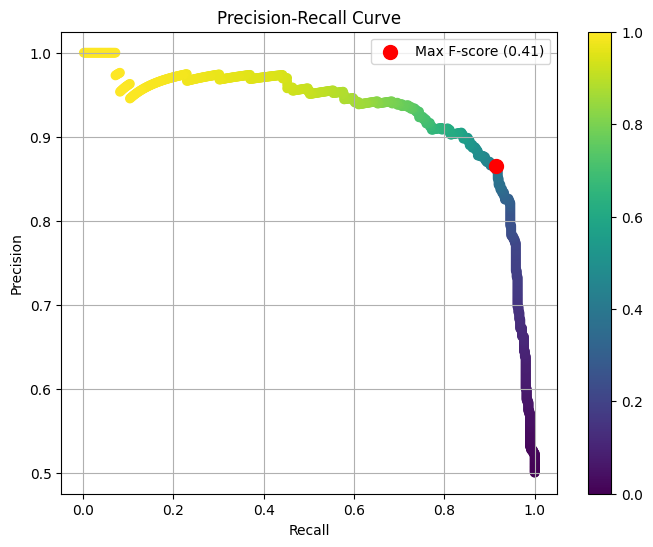

\[AP=\sum_n(R_n-R_{n-1})P_n\]

where $P_n$ and $R_n$ are the precision and recall at the $n^{th}$ threshold. A pair $(R_k, P_k)$ is referred to as an operating point, as shown in the figure.
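
The formulas above can be checked with a few lines of plain Python. The following is a minimal sketch rather than a reference implementation: the function names are hypothetical, the operating points are made up for illustration, and the sum for $AP$ assumes $R_0 = 0$ and points ordered by increasing recall.

```python
def harmonic_mean(values):
    """Harmonic mean of positive real numbers: n / sum(1 / x_i)."""
    values = list(values)
    if not values or any(v <= 0 for v in values):
        raise ValueError("harmonic mean is defined here for positive numbers only")
    return len(values) / sum(1.0 / v for v in values)


def f1_from_pr(precision, recall):
    """F1 as the harmonic mean of precision and recall (0 if either is 0)."""
    if precision == 0 or recall == 0:
        return 0.0
    return harmonic_mean([precision, recall])


def average_precision(recalls, precisions):
    """AP = sum_n (R_n - R_{n-1}) * P_n.

    Assumption of this sketch: R_0 = 0 and the operating points
    are ordered by increasing recall.
    """
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical operating points (R_n, P_n), invented for illustration only.
recalls = [0.5, 1.0]
precisions = [1.0, 0.5]

f1_example = f1_from_pr(1.0, 0.5)                     # 2 / (1/1.0 + 1/0.5)
ap_example = average_precision(recalls, precisions)   # 0.5 * 1.0 + 0.5 * 0.5
print(f1_example, ap_example)
```

With these made-up values, the $F_1$ score is $2/3$ and the $AP$ is $0.75$. Returning 0 when either input is zero matches the lowest value described in the introduction, since the harmonic mean itself is undefined there.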

$F_1$ in binary classification tasks

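    >>> from sklearn import metrics
    >>> y_pred = [0, 1, 0, 0]
    >>> y_true = [0, 1, 0, 1]
    >>> # One true positive and no false positives
    >>> metrics.precision_score(y_true, y_pred)
    1.0
    >>> # One of the two actual positives is missed
    >>> metrics.recall_score(y_true, y_pred)
    0.5
    >>> # Harmonic mean of 1.0 and 0.5
    >>> metrics.f1_score(y_true, y_pred)
    0.66...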
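
To see where these numbers come from, the same labels can be counted by hand. This is a small sketch in plain Python, not how scikit-learn computes the scores internally; `confusion_counts` is a hypothetical helper introduced only for this illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives and false negatives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    return tp, fp, fn


y_true = [0, 1, 0, 1]
y_pred = [0, 1, 0, 0]

tp, fp, fn = confusion_counts(y_true, y_pred)

# These divisions are safe for this data; other inputs may need a zero check.
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)

print(tp, fp, fn)
print(precision, recall, f1)
```

Here $TP = 1$, $FP = 0$ and $FN = 1$, which gives a precision of 1.0, a recall of 0.5 and an $F_1$ score of $2/3$.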

$F_1$ in multiclass and multilabel classification tasks

Precision, recall, and $F_1$ can be applied to each label independently. There are then a few ways to combine the results across labels, specified by the `average` argument of the scikit-learn functions.

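    >>> from sklearn import metrics
    >>> y_true = [0, 1, 2, 0, 1, 2]
    >>> y_pred = [0, 2, 1, 0, 0, 1]
    >>> # macro: unweighted mean of the per-class precisions
    >>> metrics.precision_score(y_true, y_pred, average='macro')
    0.22...
    >>> # micro: computed globally from the total TP and FN counts
    >>> metrics.recall_score(y_true, y_pred, average='micro')
    0.33...
    >>> # weighted: per-class F1 averaged with weights given by class support
    >>> metrics.f1_score(y_true, y_pred, average='weighted')
    0.26...
    >>> # beta=0.5 gives precision more weight than recall
    >>> metrics.fbeta_score(y_true, y_pred, average='macro', beta=0.5)
    0.23...
    >>> # average=None returns per-class precision, recall, F-beta and support
    >>> metrics.precision_recall_fscore_support(y_true, y_pred, beta=0.5, average=None)
    (array([0.66..., 0. , 0. ]), array([1., 0., 0.]), array([0.71..., 0. , 0. ]), array([2, 2, 2]...))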
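
The averaging modes are easier to follow when computed by hand on the same labels. The sketch below uses plain Python and hypothetical helper names. It treats an undefined ratio (zero denominator) as 0, which is an assumption of this sketch and not a statement about scikit-learn internals.

```python
def safe_div(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is 0."""
    return numerator / denominator if denominator else 0.0


def per_class_counts(y_true, y_pred):
    """Map each label to its (TP, FP, FN) counts, one-vs-rest."""
    pairs = list(zip(y_true, y_pred))
    counts = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        counts[label] = (tp, fp, fn)
    return counts


y_true = [0, 1, 2, 0, 1, 2]
y_pred = [0, 2, 1, 0, 0, 1]
counts = per_class_counts(y_true, y_pred)

precisions = {k: safe_div(tp, tp + fp) for k, (tp, fp, fn) in counts.items()}
recalls = {k: safe_div(tp, tp + fn) for k, (tp, fp, fn) in counts.items()}
f1s = {k: safe_div(2 * precisions[k] * recalls[k], precisions[k] + recalls[k])
       for k in counts}
supports = {k: tp + fn for k, (tp, fp, fn) in counts.items()}

# macro: every class counts equally
macro_precision = sum(precisions.values()) / len(precisions)

# micro: pool the counts over all classes, then compute one ratio
total_tp = sum(tp for tp, fp, fn in counts.values())
total_fn = sum(fn for tp, fp, fn in counts.values())
micro_recall = safe_div(total_tp, total_tp + total_fn)

# weighted: per-class scores weighted by the number of true instances
weighted_f1 = safe_div(sum(f1s[k] * supports[k] for k in counts),
                       sum(supports.values()))

print(macro_precision, micro_recall, weighted_f1)
```

Only class 0 has any true positives here, so the per-class precisions are $2/3$, 0 and 0. That yields a macro precision of about 0.22, a micro recall of about 0.33 and a weighted $F_1$ of about 0.27, in line with the scikit-learn output above.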

Conclusions

The $F_1$ score is often used in machine learning. However, the $F_1$ score does not take true negatives ($TN$) into account.
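
A quick way to see this is to hold $TP$, $FP$ and $FN$ fixed and vary only $TN$. The sketch below uses made-up counts and hypothetical function names, with accuracy included purely as a contrast because it does depend on $TN$.

```python
def f1_from_counts(tp, fp, fn):
    """F1 from confusion counts; TN does not appear anywhere in the formula."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def accuracy_from_counts(tp, fp, fn, tn):
    """Accuracy, shown for contrast because it does use TN."""
    return (tp + tn) / (tp + fp + fn + tn)


# Made-up counts for illustration only.
tp, fp, fn = 10, 5, 5
f1 = f1_from_counts(tp, fp, fn)

for tn in (0, 1000):
    accuracy = accuracy_from_counts(tp, fp, fn, tn)
    print(f"TN={tn}: F1={f1:.3f}, accuracy={accuracy:.3f}")
```

The $F_1$ score stays at about 0.667 in both cases, while accuracy moves from 0.5 to about 0.99 as the number of true negatives grows.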